@Work Software

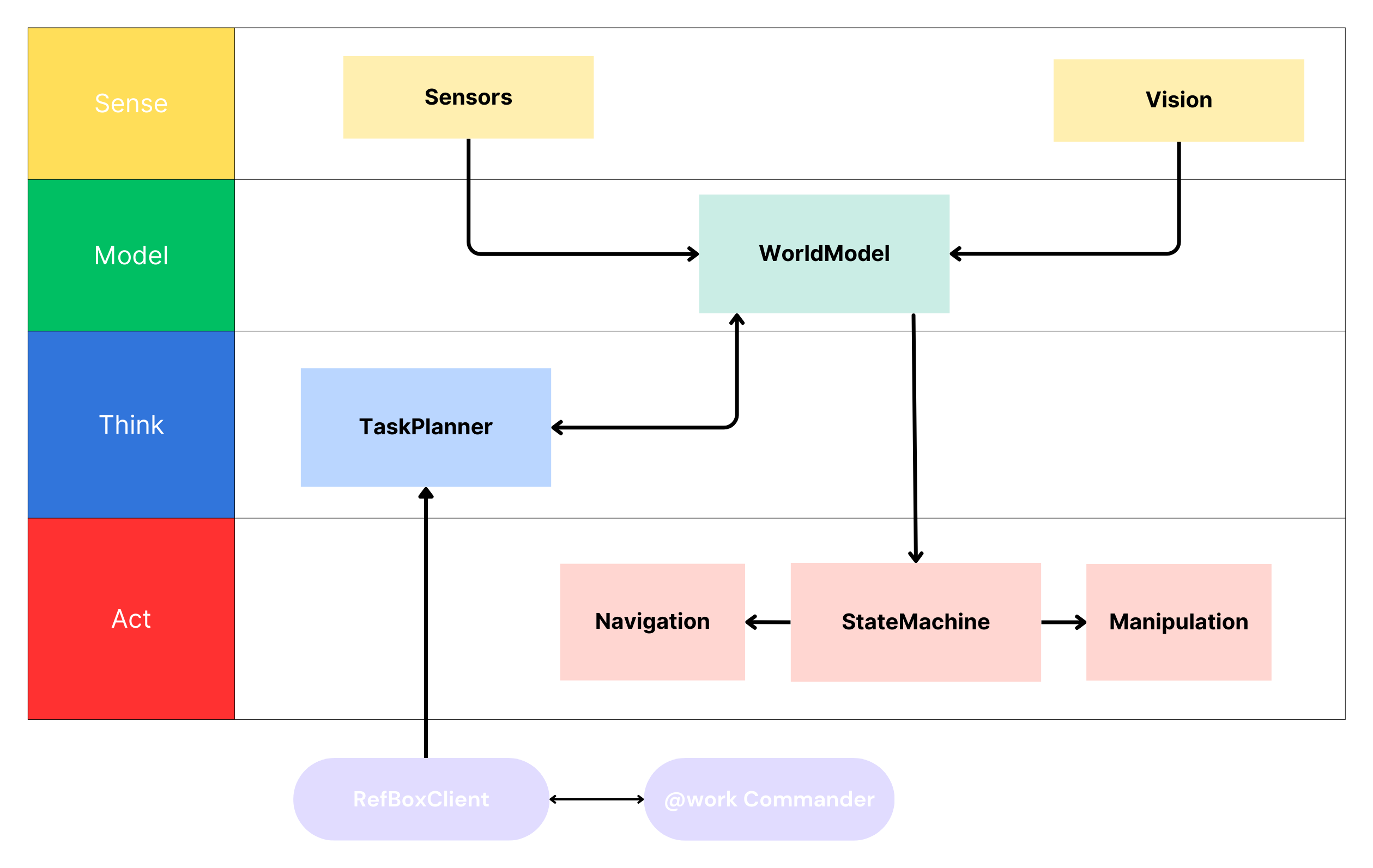

Sense, Model, Think, Act.

A robot's software provides the intelligence it needs to perceive its environment, act in it, and interact with it, so that the robot can perform tasks autonomously.

The System

Our software stack is built on the Robot Operating System (ROS), specifically the ROS 1 Noetic release. ROS provides a range of powerful tools for developing and debugging robotics software, including visualization tools such as RViz and rqt.

Our system is organized as a hierarchy of nodes that communicate over TCPROS, the TCP-based transport layer of ROS, to exchange information and coordinate their actions. The hierarchy extends from the referee box connection down to rudimentary operations (ROs). An RO is an individual action that is independent of external factors. We implement ROs as ROS action servers, which provide feedback on running tasks and allow them to be canceled if necessary.
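The goal, feedback, and cancel pattern that an action interface offers can be sketched without ROS. The following is a minimal illustration only: the class `RudimentaryOperation`, its fields, and the operation names are hypothetical and are not the actionlib API or our actual RO implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class RudimentaryOperation:
    """Hypothetical stand-in for one RO behind an action-style interface."""
    name: str
    steps: int                       # number of work units until the RO is done
    cancel_requested: bool = False
    feedback_log: List[float] = field(default_factory=list)

    def cancel(self) -> None:
        # A client may request cancellation at any time.
        self.cancel_requested = True

    def execute(self, on_feedback: Callable[["RudimentaryOperation", float], None]) -> str:
        for step in range(1, self.steps + 1):
            # Check for a cancel request before doing more work.
            if self.cancel_requested:
                return "PREEMPTED"
            progress = step / self.steps
            self.feedback_log.append(progress)
            on_feedback(self, progress)  # publish feedback to the client
        return "SUCCEEDED"


def cancel_at_half(op: RudimentaryOperation, progress: float) -> None:
    # Example client that gives up once half of the work is done.
    if progress >= 0.5:
        op.cancel()


drive = RudimentaryOperation("drive_to_workstation", steps=4)
result_full = drive.execute(lambda op, progress: None)

grasp = RudimentaryOperation("grasp_object", steps=4)
result_cancelled = grasp.execute(cancel_at_half)
```

In a real ROS 1 node the same roles are played by the action goal, the feedback messages, and the preempt request; the sketch only shows why this interface suits tasks that take time and may need to be aborted.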

The ROs encompass various navigation and manipulation actions that the system needs to perform. By organizing the system into this hierarchical structure and utilizing ROS’s communication and action server capabilities, we have been able to create a modular and flexible robotics software stack.

Our software follows a “Sense-Model-Think-Act” model as its fundamental concept. Every software component belongs to one of these four parts, which defines how it may interact with the other system components.

In the “Sense” part, nodes process raw sensor data and pass information about the environment and the robot’s current state to the WorldModel.

The WorldModel serves as the “Model” by mapping and storing the processed data. Both the “Think” and “Act” parts access the WorldModel to base their decisions on the current internal representation of the environment.

The “Think” part includes the task planner, which converts the information stored in the model and the tasks provided by the RefBoxClient into internal tasks that the robot can execute.

The execution of these tasks is handled by the “Act” system, which is further divided into the “Navigation” and “Manipulation” parts. Navigation focuses on driving the robot around the arena, while Manipulation deals with the robot arm’s interaction with workstations. Both Navigation and Manipulation are triggered by the StateMachine.
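The data flow between the four parts can be sketched in a few lines. This is a simplified illustration under stated assumptions: the `WorldModel` fields, the function names, the task tuple format, and the workstation and object names are all hypothetical, and the real system runs these parts as separate ROS nodes rather than plain function calls.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Sequence, Tuple


@dataclass
class WorldModel:
    """Model: the internal representation shared by Think and Act."""
    robot_station: str = "START"
    objects_at: Dict[str, List[str]] = field(default_factory=dict)


def sense(model: WorldModel, observation: Tuple[str, Sequence[str]]) -> None:
    """Sense: write processed sensor information into the model."""
    station, objects = observation
    model.robot_station = station
    model.objects_at[station] = list(objects)


def think(model: WorldModel, task: Tuple[str, str, str]) -> List[Tuple[str, str]]:
    """Think: turn a referee task (object, source, target) into internal tasks."""
    obj, source, target = task
    plan: List[Tuple[str, str]] = []
    if model.robot_station != source:
        plan.append(("navigate", source))
    plan += [("grasp", obj), ("navigate", target), ("place", obj)]
    return plan


def act(model: WorldModel, plan: List[Tuple[str, str]]) -> List[str]:
    """Act: execute the internal tasks and keep the model up to date."""
    log: List[str] = []
    for action, arg in plan:
        if action == "navigate":
            model.robot_station = arg
        elif action == "grasp":
            model.objects_at[model.robot_station].remove(arg)
        elif action == "place":
            model.objects_at.setdefault(model.robot_station, []).append(arg)
        log.append(f"{action}:{arg}")
    return log


model = WorldModel()
sense(model, ("WS01", ["M20", "F20_20_B"]))
plan = think(model, ("M20", "WS01", "WS02"))
log = act(model, plan)
```

Because Think and Act read only from the model, a new sensor can be added without touching the planner, as long as it writes its results into the same representation.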

Software Modules

Sense

In object recognition, we already use a neural network to distinguish between different workpieces. We also aim to perform object localization from depth images using a neural network. For this purpose, a suitable network architecture needs to be selected, training data needs to be collected, and the network needs to be trained.

Model

The WorldModel stores all data about the current run and the environment and makes it available to other parts of the program as needed. Since an error in the WorldModel can lead to incorrect robot behavior, robustness and completeness are crucial. To ensure this, test scenarios need to be developed that cover as many situations as possible, including invalid or contradictory input.
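One way to approach such test scenarios is to run small, named checks against the model and collect the results. The sketch below is hypothetical: the class layout, the `observe` and `objects_at` methods, and the two scenarios are assumptions chosen for illustration, not our actual model or test suite.

```python
from typing import Dict, Iterable, List


class WorldModelError(ValueError):
    """Raised when an update would make the model inconsistent."""


class WorldModel:
    def __init__(self, known_areas: Iterable[str]) -> None:
        self.known_areas = set(known_areas)
        self.object_location: Dict[str, str] = {}  # object id -> service area

    def observe(self, obj: str, area: str) -> None:
        # Reject observations that refer to areas the model does not know.
        if area not in self.known_areas:
            raise WorldModelError(f"unknown service area: {area}")
        # Storing one location per object means it can never be in two places.
        self.object_location[obj] = area

    def objects_at(self, area: str) -> List[str]:
        return sorted(o for o, a in self.object_location.items() if a == area)


def run_scenarios() -> Dict[str, bool]:
    results: Dict[str, bool] = {}

    # Scenario 1: an object seen at a new place must disappear from the old one.
    model = WorldModel(["WS01", "WS02"])
    model.observe("M20", "WS01")
    model.observe("M20", "WS02")
    results["object_moves"] = (
        model.objects_at("WS01") == [] and model.objects_at("WS02") == ["M20"]
    )

    # Scenario 2: an observation at an unknown area must be rejected.
    try:
        model.observe("R20", "WS99")
        results["unknown_area_rejected"] = False
    except WorldModelError:
        results["unknown_area_rejected"] = True

    return results


results = run_scenarios()
```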

Think

The Task Planner breaks the task to be executed down into smaller components and creates an optimal sequence of actions. It uses a graph-based search. At each step, all known service areas are considered as potential navigation tasks, the objects carried on the back of the robot (up to three are allowed) as potential placement tasks, and the objects on the service area as potential grasping tasks.
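The expansion rule described above can be sketched as a search over planning states. The text only says that the search is graph-based, so the choice of uniform-cost search, the state layout, the cost values, and all names below are assumptions made for this example.

```python
import heapq
from itertools import count
from typing import Dict, List, Optional, Sequence, Tuple

MAX_ON_BACK = 3  # capacity of the robot's back, as stated in the text

Action = Tuple[str, str]


def plan_tasks(start: str, areas: Sequence[str], jobs: Dict[str, Tuple[str, str]],
               nav_cost: int = 2, handle_cost: int = 1) -> Optional[List[Action]]:
    """Uniform-cost search; jobs maps object -> (source area, target area)."""
    # State: (robot location, objects on the back, objects still at their source)
    initial = (start, frozenset(), frozenset(jobs))
    tie = count()  # tie-breaker so the heap never has to compare states
    frontier = [(0, next(tie), initial, [])]
    best = {initial: 0}
    while frontier:
        cost, _, state, path = heapq.heappop(frontier)
        if cost > best.get(state, float("inf")):
            continue  # stale entry, a cheaper route to this state exists
        location, on_back, waiting = state
        if not on_back and not waiting:
            return path  # every object has been delivered
        successors = []
        # Navigation: every other known service area is a candidate.
        for area in areas:
            if area != location:
                successors.append((nav_cost, ("navigate", area),
                                   (area, on_back, waiting)))
        # Placement: objects on the back whose target is the current area.
        for obj in sorted(on_back):
            if jobs[obj][1] == location:
                successors.append((handle_cost, ("place", obj),
                                   (location, on_back - {obj}, waiting)))
        # Grasping: objects at the current area, if the back has room left.
        if len(on_back) < MAX_ON_BACK:
            for obj in sorted(waiting):
                if jobs[obj][0] == location:
                    successors.append((handle_cost, ("grasp", obj),
                                       (location, on_back | {obj}, waiting - {obj})))
        for step_cost, action, nxt in successors:
            new_cost = cost + step_cost
            if new_cost < best.get(nxt, float("inf")):
                best[nxt] = new_cost
                heapq.heappush(frontier, (new_cost, next(tie), nxt, path + [action]))
    return None  # no sequence of actions delivers all objects


jobs = {"M20": ("WS01", "WS02"), "R20": ("WS01", "WS02")}
result = plan_tasks("START", ["WS01", "WS02"], jobs)
```

In this example the cheapest plan collects both objects at WS01 before driving to WS02 once, which is the kind of batching that the three-object capacity makes possible.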

Act

The state machine in our system is implemented in C++ with the BehaviorTree.CPP library, which is designed for building and executing behavior trees. Compared with traditional state machines, behavior trees are easier to read and debug because the control flow is visible in the tree structure itself.
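The core idea of a behavior tree can be shown with two control nodes. The sketch below is a simplified Python illustration, not the BehaviorTree.CPP API: the classes `Action`, `Sequence`, and `Fallback` and the node names are hypothetical, and the control nodes are memoryless, so every tick starts again at the first child.

```python
from enum import Enum
from typing import Callable, List


class Status(Enum):
    SUCCESS = "SUCCESS"
    FAILURE = "FAILURE"
    RUNNING = "RUNNING"


class Action:
    """Leaf node that wraps a callable returning a Status."""
    def __init__(self, name: str, fn: Callable[[], Status]) -> None:
        self.name = name
        self.fn = fn

    def tick(self) -> Status:
        return self.fn()


class Sequence:
    """Ticks children in order and stops at the first one that does not succeed."""
    def __init__(self, name: str, children: List) -> None:
        self.name = name
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            status = child.tick()
            if status != Status.SUCCESS:
                return status
        return Status.SUCCESS


class Fallback:
    """Ticks children in order and stops at the first one that does not fail."""
    def __init__(self, name: str, children: List) -> None:
        self.name = name
        self.children = children

    def tick(self) -> Status:
        for child in self.children:
            status = child.tick()
            if status != Status.FAILURE:
                return status
        return Status.FAILURE


trace: List[str] = []


def record(name: str, status: Status) -> Callable[[], Status]:
    # Build a dummy action that logs its name and returns a fixed status.
    def fn() -> Status:
        trace.append(name)
        return status
    return fn


tree = Sequence("deliver", [
    Action("drive_to_station", record("drive_to_station", Status.SUCCESS)),
    Fallback("grasp_with_retry", [
        Action("grasp", record("grasp", Status.FAILURE)),
        Action("regrasp", record("regrasp", Status.SUCCESS)),
    ]),
    Action("place", record("place", Status.SUCCESS)),
])
result = tree.tick()
```

The recovery behavior (try `grasp`, otherwise `regrasp`) is expressed by where the nodes sit in the tree rather than by explicit transitions, which is what makes such trees comparatively easy to read.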

The Software Team

Franck Fogaing Kamgaing

Nesrine Bouraoui

Dorra Oueslati

Daniel Le

Felix Piepenbrink